Introduction:

Small changes to how an AI model is prompted can noticeably change the quality of its output. In this article, we explore prompt tuning, a technique for optimizing AI models by refining their inputs, and share practical strategies you can apply in your own AI projects. Our guide is written by experienced AI practitioners and focuses on knowledge you can put to work on your own models.

Contents:

- What is Prompt Tuning?

- Why is Prompt Tuning Important?

- Techniques and Strategies for Effective Prompt Tuning

- Case Studies: Successful Applications of Prompt Tuning

- Challenges and Considerations

- Future Trends in Prompt Tuning

1. What is Prompt Tuning?

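Prompt tuning is the practice of crafting or adjusting prompts, the natural-language instructions or questions given to an AI model, to improve its performance on a specific task. Unlike fine-tuning, which updates a model's weights on task-specific data, prompt tuning leaves the model's weights unchanged and improves results by changing what goes into the model. In research usage, the term also refers to learning "soft prompts": trainable embedding vectors prepended to the input while the model itself stays frozen.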
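The soft-prompt form of prompt tuning (a few trainable vectors prepended to the input of a frozen model) can be illustrated with a toy in pure Python. This is a sketch under heavy simplifying assumptions, not any library's implementation: the "model" is a hypothetical two-dimensional embedding table plus a linear head with hand-picked, frozen values, and inputs are combined by summing vectors rather than through attention. The names `EMBED`, `HEAD`, `predict`, and `tune_soft_prompt` are illustrative only. Only the prompt vectors receive gradient updates.

```python
import math

DIM = 2

# Hypothetical toy "pretrained model" with hand-picked values.
# These weights stay FROZEN: the tuning loop below never modifies them.
EMBED = {
    "good": [1.0, 0.0], "great": [1.0, 0.0],
    "bad": [-1.0, 0.0], "awful": [-1.0, 0.0],
    "movie": [0.0, 1.0],
}
HEAD = [1.0, 1.0]  # frozen linear head; it wrongly counts "movie" as positive evidence


def predict(soft_prompt, tokens):
    """Sum soft-prompt vectors and token embeddings, apply the frozen head, return P(positive)."""
    vectors = soft_prompt + [EMBED[t] for t in tokens]
    pooled = [sum(v[i] for v in vectors) for i in range(DIM)]
    logit = sum(h * x for h, x in zip(HEAD, pooled))
    return 1.0 / (1.0 + math.exp(-logit))


def tune_soft_prompt(data, num_virtual_tokens=2, lr=0.1, steps=200):
    """Batch gradient descent on the soft-prompt vectors only."""
    prompt = [[0.0] * DIM for _ in range(num_virtual_tokens)]
    for _ in range(steps):
        # For binary cross-entropy, d(loss)/d(logit) = p - y, and with sum pooling
        # d(logit)/d(prompt[j][i]) = HEAD[i] for every virtual token j.
        mean_err = sum(predict(prompt, toks) - y for toks, y in data) / len(data)
        for vec in prompt:
            for i in range(DIM):
                vec[i] -= lr * mean_err * HEAD[i]
    return prompt


train = [(["good", "movie"], 1), (["bad", "movie"], 0),
         (["great", "movie"], 1), (["awful", "movie"], 0)]
soft_prompt = tune_soft_prompt(train)
print(round(predict([], ["bad", "movie"]), 2))           # 0.5 (no prompt: undecided)
print(round(predict(soft_prompt, ["bad", "movie"]), 2))  # 0.27 (tuned prompt: negative)
```

Because this toy pools by summation, the learned prompt can only shift the output logit; in a real transformer, attention lets prompt vectors interact with every input token, which is much of what makes the technique useful in practice.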

2. Why is Prompt Tuning Important?

The significance of prompt tuning lies in its ability to adapt a general-purpose AI model to a specialized task with little or no additional training data, typically without retraining the model's weights. By refining prompts, developers can steer models toward more accurate, relevant, and contextually appropriate responses.

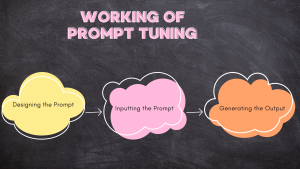

3. Techniques and Strategies for Effective Prompt Tuning

- Prompt Engineering: Crafting prompts that elicit desired responses by considering language nuances and task requirements.

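- Fine-tuning Parameters: Adjusting the settings that shape generation, such as sampling temperature and maximum output length, or, in soft prompt tuning, training only the prompt embeddings while the model's own weights stay frozen.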

- Iterative Refinement: Continuously optimizing prompts based on model performance and feedback loops.
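Iterative refinement, combined with basic prompt engineering, can be sketched as a greedy loop: try a candidate change to the prompt, score it on a small labeled development set, and keep the change only if the score improves. In this minimal sketch, `fake_model` is a hypothetical, deterministic stand-in for a real model call (so the example is testable), and the templates, candidate instructions, and exact-match scoring are illustrative assumptions.

```python
from typing import Callable, List, Tuple

Example = Tuple[str, str]  # (review text, expected label)


def fake_model(prompt: str) -> str:
    """Hypothetical, deterministic stand-in for an LLM call.

    It guesses sentiment from keywords, but replies with a bare label only when
    the prompt explicitly constrains the output format.
    """
    review = prompt.rsplit("Review:", 1)[-1].lower()
    label = "positive" if any(w in review for w in ("great", "loved", "good")) else "negative"
    if "answer with one word" in prompt.lower():
        return label
    return f"I think this review is {label}."


def accuracy(template: str, dev_set: List[Example], model: Callable[[str], str]) -> float:
    """Exact-match accuracy of a prompt template (with a {text} slot) on a labeled dev set."""
    hits = sum(model(template.format(text=text)).strip().lower() == label
               for text, label in dev_set)
    return hits / len(dev_set)


def refine(base: str, candidate_instructions: List[str], dev_set: List[Example],
           model: Callable[[str], str]) -> Tuple[str, float]:
    """Greedy refinement: prepend each candidate instruction, keep it only if accuracy improves."""
    best, best_score = base, accuracy(base, dev_set, model)
    for instruction in candidate_instructions:
        candidate = instruction + "\n" + best
        score = accuracy(candidate, dev_set, model)
        if score > best_score:
            best, best_score = candidate, score
    return best, best_score


base = "Classify the sentiment of this movie review.\nReview: {text}"
candidates = ["Be concise.", "Answer with one word: positive or negative."]
dev_set = [("A great film, I loved it.", "positive"),
           ("Dull and far too long.", "negative"),
           ("Good acting, good story.", "positive")]

best_prompt, best_score = refine(base, candidates, dev_set, fake_model)
print(best_score)                    # 1.0
print(best_prompt.splitlines()[0])   # Answer with one word: positive or negative.
```

Greedy acceptance keeps the loop simple. With a real model, scores are noisier, so a larger development set makes it less likely that a change is kept by chance.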

4. Case Studies: Successful Applications of Prompt Tuning

This section looks at real-world applications of prompt tuning in industries such as healthcare, finance, and customer service.

5. Challenges and Considerations

Prompt tuning brings its own challenges: prompts can introduce or amplify bias, a prompt can overfit to the specific examples used to tune it, and a prompt that works well on one dataset may not generalize to others.
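One practical guard against overfitting is to score a tuned prompt on held-out examples that played no part in building it. The sketch below assumes a few-shot prompt assembled from the tuning examples and a hypothetical `fake_model` stand-in that copies a demonstration's label when it sees the same text again (mimicking memorization) and otherwise falls back to a crude keyword guess. The data and helper names are illustrative, not drawn from a real system.

```python
from typing import List, Tuple

Example = Tuple[str, str]  # (review text, expected label)


def keyword_guess(text: str) -> str:
    """Crude fallback classifier used by the stand-in model."""
    positive_words = ("great", "loved", "good")
    return "positive" if any(w in text.lower() for w in positive_words) else "negative"


def fake_model(prompt: str) -> str:
    """Hypothetical, deterministic stand-in for an LLM call.

    If the query text also appears as a demonstration in the prompt, it copies
    that demonstration's label; otherwise it falls back to a keyword guess.
    """
    *demos, query = prompt.split("\n\n")
    text = query.removeprefix("Review: ").removesuffix("\nLabel:")
    for demo in demos:
        demo_text, _, demo_label = demo.partition("\nLabel: ")
        if demo_text.removeprefix("Review: ") == text:
            return demo_label
    return keyword_guess(text)


def few_shot_prompt(demos: List[Example], text: str) -> str:
    """Build a few-shot prompt: labeled demonstrations followed by the query."""
    blocks = [f"Review: {t}\nLabel: {y}" for t, y in demos]
    blocks.append(f"Review: {text}\nLabel:")
    return "\n\n".join(blocks)


def accuracy(demos: List[Example], dataset: List[Example]) -> float:
    """Fraction of dataset examples the stand-in model labels correctly."""
    hits = sum(fake_model(few_shot_prompt(demos, t)) == y for t, y in dataset)
    return hits / len(dataset)


tuning_set = [("A great film, I loved it.", "positive"),
              ("Not good at all.", "negative"),
              ("Slow, but the ending won me over.", "positive")]
held_out_set = [("Not great, honestly.", "negative"),
                ("The finale won me over.", "positive"),
                ("Loved every minute.", "positive")]

# The prompt was built from tuning_set, so scoring it on tuning_set is optimistic.
tuning_acc = accuracy(tuning_set, tuning_set)
held_out_acc = accuracy(tuning_set, held_out_set)
print(f"tuning: {tuning_acc:.2f}, held-out: {held_out_acc:.2f}")  # tuning: 1.00, held-out: 0.33
```

A large gap between the two scores is the warning sign; the held-out number is the more honest estimate of how the prompt will behave on new inputs.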

6. Future Trends in Prompt Tuning

Emerging trends include automated prompt generation, meta-learning for prompt optimization, and growing attention to ethical considerations in prompt design.
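Research approaches to automated prompt generation often use a language model to propose candidate instructions. The sketch below swaps in a much simpler technique, a grid search that enumerates every combination of hypothetical instruction phrasings, output-format constraints, and few-shot demonstration counts. The resulting templates could then be scored with an evaluation loop on labeled data. All phrasings and examples here are illustrative assumptions.

```python
from itertools import product
from typing import List, Tuple

Example = Tuple[str, str]  # (review text, label)


def generate_prompts(instructions: List[str], formats: List[str],
                     demos: List[Example], max_shots: int) -> List[str]:
    """Enumerate every combination of instruction, output format, and number of
    demonstrations (0..max_shots), returning templates with a {text} slot.

    Demo texts must not contain braces, since templates are later filled with str.format.
    """
    prompts = []
    for instruction, fmt, k in product(instructions, formats, range(max_shots + 1)):
        shots = "".join(f"Review: {t}\nLabel: {y}\n\n" for t, y in demos[:k])
        prompts.append(f"{instruction} {fmt}\n\n{shots}Review: {{text}}\nLabel:")
    return prompts


instructions = ["Classify the sentiment of the review.",
                "Is this review positive or negative?"]
formats = ["Answer with one word.", "Reply with 'positive' or 'negative' only."]
demos = [("Loved it.", "positive"), ("A waste of time.", "negative")]

prompts = generate_prompts(instructions, formats, demos, max_shots=2)
print(len(prompts))  # 12
print(prompts[0].format(text="Great cast."))
```

Grid search grows multiplicatively with each dimension, which is one reason model-driven generation and smarter search strategies attract research interest.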

Key Points at a Glance:

| Topic | Key Points |
| --- | --- |
| What is Prompt Tuning? | Definition, Purpose, Benefits |
| Techniques for Prompt Tuning | Prompt Engineering, Parameter Fine-tuning, Iterative Refinement |
| Case Studies | Industry Applications, Performance Improvements |
| Challenges | Bias, Overfitting, Generalizability |
| Future Trends | Automated Prompt Generation, Ethical Considerations |

Conclusion:

Mastering prompt tuning gives developers and AI enthusiasts a practical toolset for improving model performance. By applying the techniques covered here and keeping up with emerging trends, you can make your models more accurate and efficient. Start experimenting with prompt tuning in your own projects to see what it can do for your models.